Hybrid pedagogies for pre- and in-service practice-based teacher education 2021: Covid-normal

Kathryn Coleman and Nick J. Archer (studioFive Technician – Visual Arts & Music, MGSE)

What are hybrid pedagogies?

“My hypothesis is that all learning is necessarily hybrid. In classroom-based pedagogy, it is important to engage the digital selves of our students. And, in online pedagogy, it is equally important to engage their physical selves. With digital pedagogy and online education, our challenge is not to merely replace (or offer substitutes for) face-to-face instruction, but to find new and innovative ways to engage students in the practice of learning” (Stommel, 9 March, 2012).

There are some constraints in the current understanding of ‘blended pedagogies’ for the new ‘COVID-normal’ physically distanced classroom. Much of the discussion is still focussed on delivery of content, rather than on digital learning experiences and how the learner will engage and participate in a relational space such as a studio, field or lab. The blend or dual mode of teaching signifies a binary, an either/or, rather than a hybridity of methods, tools and relational pedagogies. We know that educational technology has changed the nature of teaching, even more so as educators gained new skills, knowledge and experience in 2020 in the design of learning and the use of tools such as Canvas, Zoom, Teams and other integrations in these main ecosystems. These experiences have, for many, facilitated new capabilities and strengthened the connections between online and in-person coursework, but they need to be furthered for the new safe ‘COVID-normal’ digital classroom to be effective.

There are new affordances that require new metaphors for ‘knowing’ and ‘being’ pedagogies.

Fixed gear ‘town’ bike (analog)

Multi-speed hybrid ‘off road’ bike (digital)

We use the metaphor of the fixed gear ‘town’ bike and the multi-speed hybrid ‘off road’ bike to explore what hybrid pedagogies might allow. We were interested in exploring this because we teach Visual Arts and Design teachers in studioFive, and we are preparing them for the Covid-normal digital studio classroom that they will encounter upon graduation. We want them to be riding multi-speed ‘off road’ bikes, prepared for innovative remote teaching. Now, both bikes are great, but they take you to different places; both enable and afford movement, transportation and freedom. They both get you from A to B, or from one place to another, but only one is a hybrid design that allows the rider to shift gears and go from the town to the track. The multi-speed hybrid ‘off road’ bike is versatile, agile and transformative.

What might this look like?

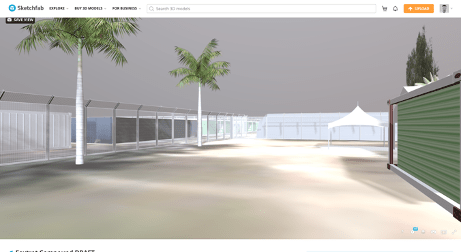

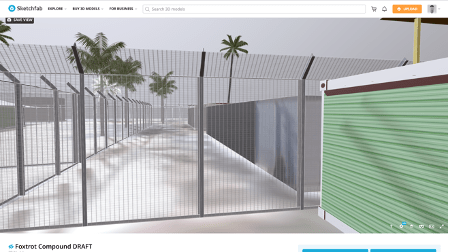

- Teaching remotely with some options for studio-based (and practitioner site-based) learning and teaching

- Simulated experiences

- Our own practice as a resource (pre-recorded, recorded on site during dual mode teaching, sustainably re-used and re-purposed)

- Blended synchronous learning (BSL): “refers to a modality where the same learning activities are experienced by students on-campus and remote students within a single group and at the same time”.

- Captured and curated in Portfolios such as OneNote Class Notebook in Teams or Padlet

- Live over table (Live polling, backchannel, and collaborative notetaking in Canvas, collaborative whiteboard spaces such as Miro, drawio, Jamboard)

- For learning in video (screencast, interactive video, lecture capture, feedback)

- As demonstration (using video recording devices that allow you to capture lab, field, outdoor and classroom demonstrations with webcams, document cameras, handheld devices, etc.)

- Social Interactions for seeing connections and collaborations on site (using Kaltura, YouTube, Vimeo as webcast and livestream, using Canvas Collaboration for Jigsaw activities)

- In real time (using Zoom, Teams, LivePolling and backchannel for class discussion)

- Cameras on individual small portable whiteboards/large paper and Post-it notes and markers (participating in group work virtually, if they’re Zooming in using their phone).

- Handheld devices (phones, iPads, GoPro) for capturing images of Post-it notes and notes to a group whiteboard.

Fixed and Unfixed Hybrid Teaching Technologies

There are different educational technologies, including Canvas, which can embed themselves into the classroom to aid our teaching across multiple sites. This is the first shift to hybrid: seeing teaching as a multi-sited and socially distanced learning space. Depending on whether you are onsite or offsite, the affordances of the technology required need to be considered as placemaking.

Here, we consider this in terms of fixed and unfixed technology, just as the bike metaphor affords. Fixed technology is embedded in the classroom, and its use off site is limited because it depends on a constant power source and network (wireless and ethernet). Unfixed technology can be moved around the classroom and can easily be used off site. Recording is possible with unfixed devices, but live streaming can be difficult and they do not integrate well into software such as Zoom.

The potential affordances of hybrid pedagogies enable a shift in how we might teach and learn, assess and evidence in real-situated and digital-online spaces and sites for pre- and in-service practice-based teacher education in the ‘COVID-normal’.

Hybrid pedagogies are speculative, relational, responsive and strategic, and are designed to focus on multimodal, material, socio-technical, creative and critical multiliteracies. Hybrid pedagogies consider the intersections of technologies, pedagogies and methodologies to design for new opportunities.

What new and innovative ways are you engaging students in the practice of learning?
