There’s more to life than statistical significance

Statistical significance is a term sometimes used to signify finding a small P-value in the results of an analysis.

By common convention, P-values less than 0.05 are considered small, so findings with P < 0.05 are deemed statistically significant.

For some people, finding statistically significant results is the holy grail of statistical analysis.

Blinkered interpretation

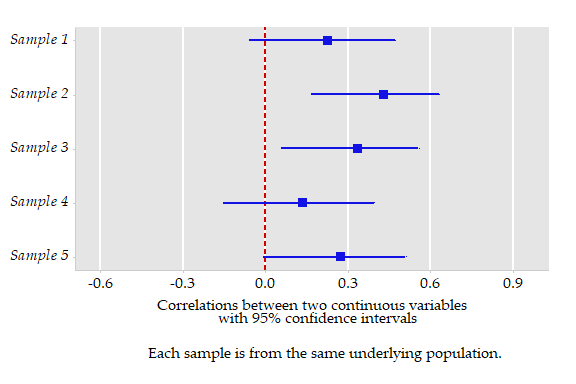

Too much focus on statistical significance can lead to a blunt, oversimplified interpretation of results. Consider, for example, the results from five different samples, each with a sample size of 50 – you can think of them as five different studies of the same population. For each sample, the graph shows the correlation between two continuous variables, with a 95% confidence interval for the true correlation. The results are simulated, so we know the true correlation: it is 0.3.

Viewed through the blinkers of statistical significance, the results seem inconsistent. The line at zero on the plot corresponds to the null hypothesis that the true correlation is zero. Using the usual definition, two of the results are statistically significant and three are not: the two whose 95% confidence intervals exclude zero are the ones with P-values less than 0.05. But remember – these results were all sampled from the same population. As expected, the sample correlations (the squares) vary around the true value of 0.3.

Doing better

Any study that only tells you about the statistical significance of the findings is selling itself short.

There is important information in the estimate of the effect observed (the correlation in the example above) and in the precision of that estimate (the confidence interval). We also need to know the magnitude of the P-value, rather than just whether it falls above or below a cutoff.

Thinking about the estimate and its precision allows you to think about the substantive meaning of the results.
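A minimal sketch of such a report, assuming Fisher's z approximation for the interval and P-value (the original does not say how its intervals are computed). The helper `report` and both studies are hypothetical: a very weak correlation (r = 0.05) in a large sample of 5,000, and a strong one (r = 0.5) in a sample of 12.

```python
import math
from statistics import NormalDist

Z975 = NormalDist().inv_cdf(0.975)  # two-sided 95% critical value, about 1.96


def report(r, n):
    """Hypothetical helper: estimate, approximate 95% CI and two-sided P-value
    for a sample correlation r from n observations, via Fisher's z."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    lo, hi = math.tanh(z - Z975 * se), math.tanh(z + Z975 * se)
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z) / se))
    return {"r": r, "n": n, "ci": (lo, hi), "p": p}


# Two hypothetical studies: a weak effect in a big sample, a strong one in a small sample.
studies = [report(0.05, 5000), report(0.5, 12)]
for s in studies:
    lo, hi = s["ci"]
    print(f"n = {s['n']}: r = {s['r']:.2f}, 95% CI ({lo:.2f}, {hi:.2f}), P = {s['p']:.4f}")
```

The first study is statistically significant, but its whole interval sits below 0.08; the second is not, yet its interval runs from about -0.10 to about 0.83. A significant/not-significant verdict alone would favour the first study; the estimates and intervals show that the two results say very different things about the size of the effect.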

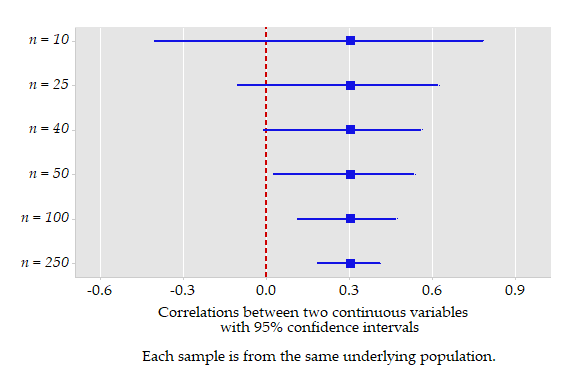

The next graph shows six hypothetical studies with differing sample sizes but the same observed correlation (the squares at 0.3). The top result, for a sample size of ten, has a wide confidence interval. Again, the vertical line on the plot corresponds to the null hypothesis that the true correlation is zero. For a sample size of ten, the result is not statistically significant. As the sample size increases, however, the confidence intervals narrow. Once the sample size reaches 50, the null value falls outside the interval – the result is statistically significant. Yet the estimated effect, the correlation, has exactly the same magnitude in every study. The studies haven’t estimated different effects; they have estimated the same effect with different precision. Here, the larger the sample, the greater the potential for finding a small P-value.
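The pattern in the second graph follows from how the interval's width depends on the sample size. The sketch below holds the observed correlation at 0.3 and recomputes the 95% interval and P-value for a range of sample sizes. It assumes Fisher's z approximation (standard error 1/sqrt(n - 3)), and the sample sizes other than 10 and 50 are made up for illustration, since the text does not list them.

```python
import math
from statistics import NormalDist

Z975 = NormalDist().inv_cdf(0.975)  # two-sided 95% critical value, about 1.96


def fisher_ci_and_p(r, n):
    """Hypothetical helper: approximate 95% CI and two-sided P-value for a
    sample correlation r from n observations, via Fisher's z."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    lo, hi = math.tanh(z - Z975 * se), math.tanh(z + Z975 * se)
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z) / se))
    return lo, hi, p


r_obs = 0.3  # the same observed correlation in every study
sample_sizes = [10, 20, 30, 40, 50, 100]  # hypothetical; only 10 and 50 come from the text
rows = []
for n in sample_sizes:
    lo, hi, p = fisher_ci_and_p(r_obs, n)
    rows.append((n, lo, hi, p))
    print(f"n = {n:3d}: r = {r_obs:.2f}, 95% CI ({lo:.2f}, {hi:.2f}), "
          f"width {hi - lo:.2f}, P = {p:.3f}")
```

In this sketch the interval at n = 40 still includes zero while the one at n = 50 does not, consistent with the text, yet the estimate itself never moves: only the precision changes.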

Avoid the slippery slide

The term statistical significance is often used as shorthand. However, it signifies the relative magnitude of the P-value, nothing more. A problem arises when statistical significance is taken to mean significance of the findings in a more general sense. Findings might be described as:

- statistically significant in summarising the results

- significant in discussing the findings, and

- important in wrapping up the conclusions.

Take care to avoid this slippery slide where statistical significance morphs into importance. The findings may or may not be substantively significant or important. This will depend on the magnitude of the effects, rather than on the statistical significance per se.